Evaluation Toolkit – Planning Your Evaluation

These questions will help you design your approach and make your evaluation as efficient and effective as possible with limited time and resources:

- What do I want to find out?

- How will I use that information?

- What resources (time/budget) do I have available?

- Should I consider working in partnership with others?

- Are there any ethical issues I need to consider?

- How will I analyse the data that I collect?

What do I want to find out?

Before starting any evaluation, it is important to have a clear idea about what it is you want to know, in as much detail as possible. This is similar to developing a research question.

Defining what you’re aiming to achieve from the evaluation at the start of the project really helps you focus your efforts, and identify what you do (and don’t) need to include. It also helps you avoid running into problems later on in the project.

For example, a question like ‘Was this activity good?’ is much harder to answer (what do you mean by ‘good’, and for whom?) than ‘Did most of the participants recognise the term “black hole” by the end of the activity?’. What you want to find out will determine the rest of the evaluation, especially the tools you use.

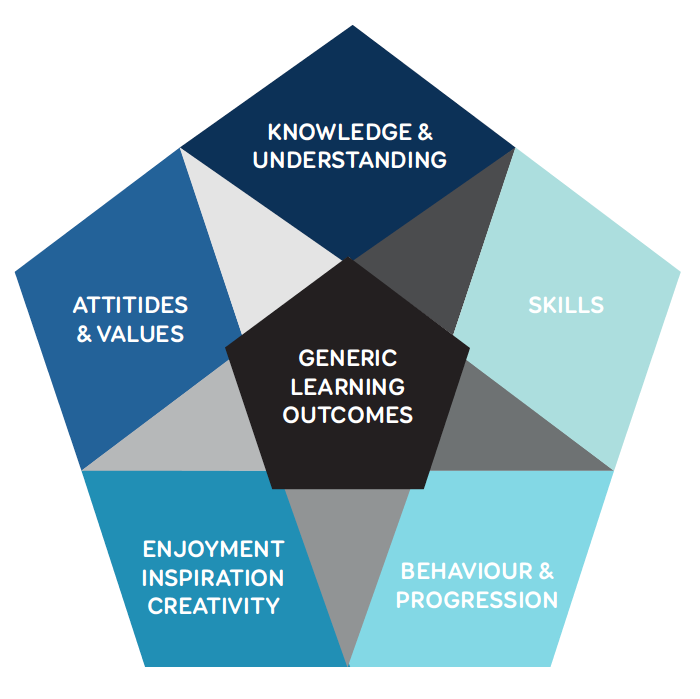

Remember that simply gaining knowledge may be only one possible outcome of your project. Your participants may, for example, also develop their communication skills, change their attitude to your organisation, be inspired by your work or make changes in their own work. The Generic Learning Outcomes framework from the Arts Council emphasises the range of emotional, attitudinal, skills and behavioural changes you might inspire in your participants.

The framework’s website includes examples of each type of outcome, as well as useful advice on how to apply the framework to your own project, and templates for recording and analysing both quantitative and qualitative data. We have also used this framework in our interactive tool selector to indicate which tools best suit the main categories of Generic Learning Outcomes.

How will I use that information?

It is often helpful to consider how you’ll use the evaluation findings before you start. Do you need to convince stakeholders of the value of the activity? Give feedback to funders? Make improvements in the activity?

If you’re primarily looking to make improvements, you might want to collect data that highlights which elements of an activity the participants found most engaging, or confusing. If you need to tell funders about who attended, you’ll need to gather demographic information. If you need to convince stakeholders of value, consider having a conversation with them in advance about the kind of data they might find convincing.

Academics often ask whether they can publish their evaluation findings. The answer is yes! However, for the results to be rigorous enough for academic publication, you need to approach the evaluation with the same detail and grounding in the research literature as you would any other academic study. For example, your methodology and approach would need to be informed by existing published work, and your audience recruitment and sampling would need to meet the standard expected by the journal you intend to publish in.

Deciding from the start that you hope to publish the findings therefore increases the depth and rigour you need to build into your evaluation approach.

What resources (time/budget) do I have available?

It is important to be realistic when thinking about evaluation. Developing the evaluation tools, collecting data and analysing it always take longer than you expect, especially the first time you do it. It is often better to set modest expectations (e.g. about how many people you might collect data from) and plan for a small amount of high-quality data than to collect data you don’t have time to analyse, or data so messy that it can’t help you answer your questions.

Even if you can only collect limited data (which happens a lot in evaluation), you can still gain useful insights. You can also make the most of limited resources by partnering with others and by modifying or re-using tools others have developed or you have used previously.

At the other end of the scale, for larger projects you may wish to consider hiring an external evaluator to act as an independent ‘critical friend’ for the project. Participants are often more honest when talking to someone they perceive as separate to the activity, and external evaluators may spot things (both positive and negative) that would not normally be noticed by a member of the project team.

Should I consider working in partnership with others?

Working with others is a great way to make the most of limited resources. For example, you could ask a colleague to distribute questionnaires or conduct snapshot interviews at your event and then return the favour. If there are others conducting similar activities to yours, you could work together to develop common evaluation tools and even collaborate on the analysis.

You may also want to consider whether what you are doing could be part of a larger project. Are there teachers, researchers, students or others who might be interested in it as a case study activity? Are there funders or other organisations who might be interested in your approach and/or the evidence you gather, and willing to share the costs of data collection and analysis?

Thinking creatively at an early stage might make a much larger, mutually beneficial evaluation possible.

Are there any ethical issues I need to consider?

Although you will have considered many ethical issues in planning your activities (e.g. around safety or causing emotional upset), there are additional things to think about when planning your evaluation. In particular, collecting personally identifiable data (e.g. names and addresses) can be very problematic in most countries for data protection reasons, so if you do not need to collect such data, then don’t. Opt for an anonymous approach instead, perhaps using categories (e.g. postal codes) rather than uniquely identifiable specifics. If you do need to collect personal information, be sure to inform participants how that information will be used and stored, and get their explicit consent for such uses.

Demographic questions (e.g. gender, age, race/ethnicity, social class) can also make people uncomfortable or even cause offence. So unless you need this information and have a clear idea of why and how you will use it, it is again best not to collect it. If you do need to collect it, word the questions sensitively (e.g. asking people to choose which age bracket they fall into, rather than provide an exact age), perhaps reusing questions from previous evaluations and/or asking other people to review questions that might be uncomfortable.
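
If you already hold exact values (for instance from a booking system), one way to follow the advice above is to coarsen them into categories before they enter your evaluation dataset. The following is a minimal sketch in Python, not a definitive approach: the bracket boundaries, the function names and the assumption of full UK-style postcodes are all illustrative choices that do not come from this toolkit.

```python
# Hypothetical age brackets; adjust them to match what your report needs.
AGE_BRACKETS = [
    (0, 15, "Under 16"),
    (16, 24, "16-24"),
    (25, 44, "25-44"),
    (45, 64, "45-64"),
]


def age_bracket(age: int) -> str:
    """Return the label of the bracket that contains `age`."""
    if age < 0:
        raise ValueError("age cannot be negative")
    for low, high, label in AGE_BRACKETS:
        if low <= age <= high:
            return label
    return "65 or over"


def postcode_district(postcode: str) -> str:
    """Keep only the outward part of a full UK-style postcode.

    Assumes the input is a complete postcode such as 'M1 1AE'; the last
    three characters (the inward part) are dropped.
    """
    compact = postcode.replace(" ", "").upper()
    if len(compact) < 5:
        raise ValueError("expected a full postcode such as 'M1 1AE'")
    return compact[:-3]


print(age_bracket(30))                # 25-44
print(postcode_district("sw1a 1aa"))  # SW1A
```

Storing only the bracket and the district, and discarding the exact values, keeps the data useful for describing who attended while making individuals harder to identify.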

Working with children is also a common ethical consideration, as they can’t legally give their own consent to participate. Sometimes the teacher (or the school) can give permission for you to distribute a questionnaire or speak to certain individuals, but more commonly you will need to seek permission from each child’s parent or carer. This takes time, as it needs to be done before the event, but many schools will build it into the process of seeking permission for the children to attend. Speak to the school or parents in advance to work out how best to proceed.

It is probably obvious, but no-one can be forced to participate in evaluation! You need to give people the opportunity to opt out, whether by not completing a questionnaire, politely declining a request for interview, or being excluded from any observations that might take place. Fortunately, people rarely opt out (especially if you’ve made it clear in advance how the data will be used and why you’re interested in collecting it), but do think about how you’ll gently seek participants’ permission to be involved, as well as what you’ll do if they decline.

Finally, try to make your evaluations as short and enjoyable as possible. This will increase the amount of data you can collect, and it stops the evaluation detracting from engagement with your event or activity!

How will I analyse the data that I collect?

Again, it’s worth being realistic here, and thinking carefully about what skills and resources you have access to. If you’ve never done statistical analysis or thematic coding before then it could really help to review how those processes work before you conduct your evaluation, to be sure that they will provide what you want. For example, if you’re hoping to apply statistical analysis then that might affect the number of participants you need to collect data from (and how they are recruited).
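
To give a feel for how statistical aims affect participant numbers, here is a rough sketch of one common textbook calculation: the sample size needed to estimate a proportion (e.g. the share of participants who recognised a term) within a chosen margin of error. It assumes simple random sampling, which event audiences rarely provide, so treat the result as a planning guide only. The function name and default values are illustrative assumptions.

```python
import math


def sample_size(margin_of_error, z=1.96, expected_proportion=0.5, population=None):
    """Estimate how many responses are needed to measure a proportion.

    margin_of_error:     acceptable error, e.g. 0.1 for plus or minus 10 points
    z:                   z-score for the confidence level (1.96 is about 95%)
    expected_proportion: best guess at the answer; 0.5 is the cautious choice
    population:          total audience size, if known and small
    """
    p = expected_proportion
    n = (z ** 2) * p * (1 - p) / (margin_of_error ** 2)
    if population is not None:
        # Finite population correction: a small audience needs fewer responses.
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)


print(sample_size(0.05))                  # 385
print(sample_size(0.10))                  # 97
print(sample_size(0.10, population=200))  # 66
```

Halving the margin of error roughly quadruples the number of responses needed, which is why it pays to decide how precise the answer has to be before collecting any data.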

If you’re planning to apply a ‘light touch’ analysis such as word clouds, you may not need to collect demographic information. You may, however, need to allow time to type up any handwritten responses for computer analysis (see the section above on resources).
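
A word cloud is essentially a picture of word counts, so once responses are typed up you can get the same information from a simple frequency table. The sketch below is one possible way to do this with Python’s standard library; the stop-word list is a deliberately tiny, hypothetical example, and the sample responses are invented for illustration.

```python
import re
from collections import Counter

# Illustrative stop words only; a real list would be much longer.
STOP_WORDS = {"the", "and", "was", "were", "it", "of", "to", "a", "i", "is"}


def word_frequencies(responses, top_n=10):
    """Count the most frequent words across free-text responses."""
    counts = Counter()
    for response in responses:
        # Lower-case the text and keep runs of letters and straight apostrophes.
        words = re.findall(r"[a-z']+", response.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts.most_common(top_n)


# Invented example responses.
responses = [
    "The black hole demo was amazing",
    "Amazing demo, loved the black hole model",
    "Too loud",
]
for word, count in word_frequencies(responses, top_n=4):
    print(word, count)
```

The counts can be pasted into a spreadsheet or passed to a word cloud tool, and they also make it easy to check that a large word in the cloud reflects many respondents rather than one person repeating themselves.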
