Evaluation Toolkit – Recommended Resources

Within the Europlanet Evaluation Toolkit we’ve highlighted key content and practical tools that are most likely to be of interest to the majority of Europlanet members involved in delivering outreach activities. However, there are plenty of other good evaluation resources available for anyone who wants to take this further. Listed below are some of the best we have come across.

Do feel free to suggest others by emailing Anita Heward, Europlanet Outreach Coordinator.

Contents

- General evaluation advice / strategies

- Evaluating activities in specific environments

- Clarifying your intended outcomes

- Additional suggested tools and approaches

- Online and social media

- Conducting detailed qualitative research

General evaluation advice / strategies

The Center for Advancement of Informal Science Education (USA) produced the Principal Investigator’s Guide, designed for project leaders. Not assuming any familiarity with evaluation, it provides strategic advice and detailed practical suggestions, including an extensive overview of the Logic Model approach. Also see informalscience.org for what is probably the largest collection of STEM outreach evaluation reports in the world.

The Royal Academy of Engineering (UK) provides a very readable and practical toolkit designed to support engineers and academics to evaluate their public engagement activity.

The User-Friendly Handbook for Project Evaluation is an updated version of a classic evaluation guide; this version was commissioned by the National Science Foundation (USA) in 2010.

The European Evaluation Society provides an excellent list of online evaluation books and handbooks, including a useful indication as to who each guide is most suited to.

The National Co-ordinating Centre for Public Engagement (UK) has compiled a comprehensive summary of evaluation resources suitable for use by higher education staff.

RCUK (Research Councils UK) commissioned this guide to public engagement evaluation, explicitly designed for use by academics and researchers.

There is a huge range of wider evaluation advice from outside the public engagement field. BetterEvaluation is one of the most extensive international resources, comprising 300+ evaluation options and various case studies.

Evaluating activities in specific environments

Each of the following resources focuses on one specific activity type or delivery environment.

The EU Directorate-General for Communication has published a toolkit for the evaluation of communication activities (2015). In particular, there are separate sections on evaluating conferences, newsletters, websites, PR and press events, social media and smartphone activities.

The Cornell Lab of Ornithology (USA) has produced a guide to evaluating Citizen Science projects. Whilst you do need to register to download it, it’s a very helpful resource if you’re at all involved in citizen science programmes. It’s also worth taking a look at their wider toolkit and resources for examples of evaluation tools that may be suited to your needs.

Clarifying your intended outcomes

For your evaluation activity to be both useful and meaningful, it is essential that you are clear about what you are trying to achieve: what outcomes (changes to participants) you intend.

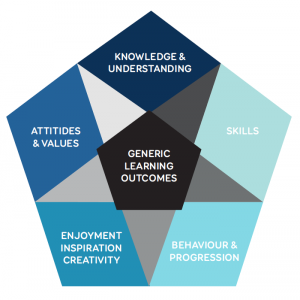

One of the clearest and most useful frameworks is the Generic Learning Outcomes, which emphasises the range of emotional, attitudinal, skills and behavioural changes that you might inspire in your participants, in addition to the more obvious knowledge gains.

The framework’s website includes examples of each type of outcome, as well as useful advice on how to apply the framework to your own project, and templates for recording and analysing both quantitative and qualitative data. We have also used this framework in the final columns in our list of tools to explicitly indicate what sorts of tools best suit the main categories of Generic Learning Outcomes.

The EU-funded Space Awareness project is an example of a large-scale (international) outreach programme that applied the Generic Learning Outcomes to create a project-wide evaluation framework. This enabled robust comparison of the project results across a wide range of activity types and audiences, and a clear structure and scope for determining the overall impacts of the project (the final Space Awareness evaluation report is also available publicly).

Warning: it is much better to have a small number of prioritised, focused outcomes than a long list. The more outcomes you set, the more challenging they will be to evaluate!

Additional suggested tools and approaches

There are plenty of other evaluation tools available. Here we link to some of the best technique overviews that we have come across, as well as more detailed advice on specific tools that weren’t included in the Europlanet Evaluation Toolkit.

The National Co-ordinating Centre for Public Engagement (NCCPE, UK) hosts a document published by the Inspiring Learning for All framework, which provides a great overview of the strengths of, and top tips for using, a wide variety of data collection tools.

The European Commission also produced a guide containing 12 separate evaluation tools, including interview, focus group, survey, expert panel, case study, context indicators, SWOT, multi-criteria analysis, and cost-effectiveness analysis.

Additionally, BetterEvaluation has produced a list (and descriptions) of a wide range of other potential tools, themed around where the data is obtained from (individuals, groups, observation, physical measurements or existing records and data).

Online and social media

Though alternatives are available, Google Analytics is the classic free website analysis software, providing a huge range of information about the online traffic to your site, as well as many useful tools and tips on how to get the most out of your analysis. If your site is fairly large and you’re interested in comparing it with other similar sites, then SimilarWeb is a great free benchmarking tool providing automated analysis.

If you have a dedicated Facebook page, there is a built-in analytics tool called Insights which will give you all sorts of information about how your page content has been used, and by whom. Facebook also provides plenty of support advice on how to access specific Insights information.

Twitter and YouTube also have built-in analytics tools.

Conducting detailed qualitative research

Our initial scoping work suggested that detailed qualitative data collection techniques (such as interviews, focus groups and structured observations) were unlikely to be used by many Europlanet members, so we have not included them in the main toolkit. However, they are a valuable way to gather in-depth information, so here are some particularly helpful existing resources on this topic:

- Semi-structured interviews. Further useful advice on this topic is provided by QualPage and the Open University.

- Comparison of ‘good’ and ‘bad’ interview practice. These videos provide a useful practical demonstration of conducting interviews, and are relatively short (20 minutes total):

  - Poor practice

  - Better practice (though not always perfect!).

- Conducting focus groups. Citizens Advice has produced an excellent accessible guide on running small-group discussions.

- Conducting observations. Taylor-Powell & Steele have created a very practical guide to conducting observations, incorporating a wide range of logistical and conceptual issues, as well as including some example observation guides at the end.

- Personal meaning maps (PMMs). A very useful qualitative research tool, originally developed for use within museums but now applied extensively elsewhere. PMMs involve two open-ended interviews, conducted before and after an activity, with the participant drawing or writing their responses to a given prompt (key word). Suh (2010) provides a useful overview of how to apply PMMs in practice.

- Our section on thematic coding also includes some great links to other useful resources.